Before you can dominate search rankings with great content and backlinks, you need a flawless technical foundation. A website plagued by crawl errors, slow speeds, and poor mobile optimisation is like a sports car with a faulty engine – it looks good but goes nowhere fast. This comprehensive technical SEO audit checklist is your roadmap to diagnosing and fixing the underlying issues that prevent your site from reaching its full potential.

Think of it as a deep-dive health check for your website, ensuring search engines can crawl, index, and understand your content efficiently. A strong technical base is not a "nice-to-have"; it is the non-negotiable prerequisite for sustainable search visibility. Without it, even the most brilliant content marketing campaigns will struggle to gain traction, as Google and other search engines may be unable to properly access or interpret your pages. This leads to missed ranking opportunities and wasted marketing spend.

Whether you're a marketing manager, an e-commerce brand owner, or a startup founder, this guide provides the actionable steps you need. We will break down critical elements from XML sitemaps and robots.txt files to Core Web Vitals and structured data implementation. Following this checklist allows you to systematically identify and resolve the technical roadblocks that are holding your website back. This isn't just about ticking boxes; it's about building a robust, high-performing digital asset that search engines favour and users appreciate. This guide distils years of expertise into a practical, step-by-step process you can follow today to fortify your site's SEO foundation.

1. XML Sitemap Optimisation and Submission

An XML sitemap acts as a roadmap for your website, providing search engines like Google and Bing with a structured list of all your important URLs. This file helps crawlers discover and understand your site's content more efficiently, especially for large sites, new sites with few external links, or pages with rich media content. A crucial part of any technical SEO audit checklist is verifying that your sitemap is correctly formatted, regularly updated, and properly submitted.

A well-optimised sitemap should only contain your most valuable, indexable pages. It must exclude any URLs that are blocked by your robots.txt file, return error codes (like 404s), or are marked with a "noindex" tag. Including non-canonical URLs or redirects can send mixed signals to search engines and waste crawl budget.

Real-World Examples

Many platforms automate sitemap generation, simplifying the process for website owners. For instance, Shopify stores create dynamic sitemaps that automatically update as you add or remove products. Since version 5.5, WordPress has included a core sitemap feature, though plugins like Yoast SEO offer more advanced control. Large publishers, such as The New York Times, often use multiple, segmented sitemaps organised by date or content type to manage their vast number of articles efficiently.

Actionable Tips for Sitemap Optimisation

To ensure your sitemap is a powerful asset, follow these best practices:

- Clean Your Sitemap: Regularly scan for and remove any URLs that return 4xx or 5xx status codes to prevent crawlers from visiting dead ends.

- Use Canonical URLs: Every URL listed in your sitemap must match the canonical URL for that page exactly, including the protocol (http vs. https) and domain version (www vs. non-www).

- Automate Submission: Use plugins or scripts to automatically generate and resubmit your sitemap whenever significant content changes occur.

- Monitor Coverage Reports: Keep a close eye on the "Sitemaps" report in Google Search Console. This tool provides invaluable data on how many submitted URLs are indexed and highlights any errors that need addressing.

- Leverage Sitemap Index Files: If your site has more than 50,000 URLs or a sitemap file exceeds 50 MB uncompressed, split the URLs across several sitemaps and reference them from a sitemap index file. This keeps them organised and easier for search engines to process.
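
The splitting rule in the last tip can be sketched in a few lines of Python. This is a minimal illustration rather than a production generator: the function names (`split_urls`, `build_sitemap`, `build_sitemap_index`) and the example domain are assumptions, and it only handles the 50,000-URL limit, not the file-size limit.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file URL limit from the tip above


def split_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into chunks that each fit in one sitemap file."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap(urls):
    """Return a <urlset> document (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


def build_sitemap_index(sitemap_urls):
    """Return a <sitemapindex> document pointing at each child sitemap."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = url
    return ET.tostring(index, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical site with 120,001 product URLs.
    urls = [f"https://www.example.co.uk/p/{i}" for i in range(120_001)]
    chunks = split_urls(urls)
    children = [
        f"https://www.example.co.uk/sitemap-{n}.xml"
        for n in range(1, len(chunks) + 1)
    ]
    print(len(chunks), "sitemaps referenced by one index file")
    print(build_sitemap_index(children)[:80])
```

Only canonical, indexable URLs should be passed in; filtering out redirects, error pages, and "noindex" pages happens before this step.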

2. Robots.txt File Configuration and Crawl Budget Management

The robots.txt file is a simple yet powerful text file located in your website's root directory. It instructs search engine crawlers which pages or sections they should or should not crawl. Correctly configuring this file is a cornerstone of any technical SEO audit checklist, as it is critical for managing crawl budget, protecting sensitive areas of your site, and preventing search engines from wasting resources on low-value pages.

A misconfigured robots.txt file can have severe consequences, from accidentally blocking your entire site from search engines to allowing them to crawl and index private or duplicate content. The goal is to guide crawlers towards your most important content and away from URLs that offer little to no SEO value, such as internal search results, admin login pages, or user-specific directories. This strategic guidance helps search engines understand and index your site more efficiently.

Real-World Examples

Large, complex websites provide excellent examples of effective robots.txt usage. For instance, e-commerce sites like Amazon often block faceted navigation URLs (e.g., filtered by price or colour) to prevent an explosion of duplicate or thin content pages. Wikipedia strategically blocks user talk pages and edit histories to focus its immense crawl budget on its core encyclopaedic articles. Similarly, Reddit uses its robots.txt file to prevent the crawling of user-specific feeds and session-based URLs that serve no purpose in search results.

Actionable Tips for Robots.txt Optimisation

To ensure your robots.txt file is working for you, not against you, follow these best practices:

- Never Block Rendering Resources: Ensure you are not disallowing access to crucial CSS, JavaScript, or image files. Google needs to render pages like a human user to understand them fully.

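- Test Before Deploying: Always validate changes before making them live. Google has retired the standalone robots.txt Tester, so check the robots.txt report in Google Search Console or run your rules through a robots.txt parser first. This helps you avoid costly mistakes.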

- Include Your Sitemap: Add a line at the bottom of your robots.txt file referencing your XML sitemap (e.g., `Sitemap: https://www.yourdomain.co.uk/sitemap.xml`).

- Use 'noindex' for Indexing Control: Remember that `Disallow` in robots.txt does not prevent indexing if a page is linked to externally. To prevent a page from appearing in search results, use a meta robots "noindex" tag instead, and leave the page crawlable so that search engines can actually see the tag.

- Block Parameter-Based URLs: If URL parameters create duplicate content (e.g., from tracking or filtering), use a directive like `Disallow: /*?` to prevent crawlers from accessing them.
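
One way to test rules before deploying them is to run a draft file through a parser locally. The sketch below uses Python's standard `urllib.robotparser`; the rules, URLs, and the `blocked_urls` helper are illustrative assumptions. Note that this parser does plain prefix matching and, to our knowledge, does not interpret wildcard patterns such as `/*?`, so the sample rules stick to simple path prefixes.

```python
from urllib.robotparser import RobotFileParser

# Draft robots.txt for a hypothetical site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
Allow: /

Sitemap: https://www.yourdomain.co.uk/sitemap.xml
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given rules would stop `user_agent` crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    must_stay_crawlable = [
        "https://www.yourdomain.co.uk/",
        "https://www.yourdomain.co.uk/blog/technical-seo",
        "https://www.yourdomain.co.uk/assets/site.css",
    ]
    # An empty list means none of the important URLs are blocked.
    print(blocked_urls(ROBOTS_TXT, must_stay_crawlable))
    print(blocked_urls(ROBOTS_TXT, ["https://www.yourdomain.co.uk/search?q=shoes"]))
```

Keeping a short list of URLs that must always stay crawlable, including CSS and JavaScript assets, turns this into a quick regression check for every robots.txt change.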

3. Page Speed and Core Web Vitals Optimisation

Page speed refers to how quickly content loads on your webpage, while Core Web Vitals are specific user-centric metrics that Google uses to measure real-world user experience. These metrics, Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS), are confirmed ranking factors. A fundamental part of any technical SEO audit checklist involves measuring these vitals, identifying performance bottlenecks, and implementing targeted improvements for both mobile and desktop users.

A faster, more responsive site directly correlates with better user engagement and higher conversion rates. By prioritising page speed, you not only cater to search engine algorithms but also provide a superior experience for your visitors, reducing bounce rates and encouraging repeat visits. Tools like Google PageSpeed Insights and Lighthouse are essential for diagnosing performance issues.

Real-World Examples

The impact of optimising Core Web Vitals is well-documented. Vodafone improved its LCP by 31%, which led to an 8% increase in sales. Similarly, Tokopedia reduced its LCP from 5.5 seconds to just 1.9 seconds and saw a corresponding 35% increase in organic traffic. More recently, Redbus focused on improving INP, resulting in a 7% uplift in sales, demonstrating the direct commercial benefits of a faster user experience.

Actionable Tips for Page Speed Optimisation

To enhance your site's performance and Core Web Vitals scores, focus on these high-impact actions:

- Optimise and Compress Images: Use modern formats like WebP or AVIF and implement lazy loading for images and videos that appear below the fold.

- Minify Code: Reduce the file size of your CSS, JavaScript, and HTML by removing unnecessary characters, comments, and white space.

- Eliminate Render-Blocking Resources: Defer non-critical CSS and JavaScript to ensure the main content of the page can be rendered by the browser as quickly as possible.

- Set Explicit Image Dimensions: Prevent layout shifts (CLS) by always including `width` and `height` attributes on your image and video elements.

- Leverage Browser Caching: Instruct browsers to store static assets locally by setting appropriate cache-control headers, speeding up load times for return visitors. To effectively track these improvements, you can learn more about mastering SEO analytics for better results.

- Use a Content Delivery Network (CDN): Serve static resources from servers located closer to your users, significantly reducing latency and improving load times globally.
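
When you measure before and after applying these fixes, it helps to score each metric against Google's published thresholds: LCP is "good" at 2.5 seconds or less and "poor" above 4 seconds, INP at 200 ms or less and above 500 ms, and CLS at 0.1 or less and above 0.25. The helper below is a minimal sketch; the function name `rate` and the sample values are illustrative.

```python
# (good upper bound, poor lower bound) for each Core Web Vital.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric, value):
    """Classify a measurement as 'good', 'needs improvement', or 'poor'."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    # Illustrative measurements for a single page template.
    for metric, value in [("LCP", 5.5), ("LCP", 1.9), ("INP", 350), ("CLS", 0.02)]:
        print(metric, value, "->", rate(metric, value))
```

Google assesses these metrics at the 75th percentile of real-user page loads, so feed in field data where you have it rather than a single lab run.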

4. Mobile-Friendliness and Mobile-First Indexing Compliance

Since Google completed its switch to mobile-first indexing, the mobile version of your website is now the primary version for indexing and ranking. Mobile-friendliness is no longer a recommendation but a core requirement for search visibility. An essential part of any technical SEO audit checklist is a thorough verification that your site offers a seamless, fully functional experience on mobile devices and that its content mirrors the desktop version.

A mobile-compliant site ensures that crawlers can access and render all content without issue, just as they would on a desktop. This means that important text, links, and structured data must be present and identical across both versions. Adhering to modern website design best practices is fundamental for ensuring your site is fully compliant and provides an optimal user experience across all devices.

Real-World Examples

The impact of a mobile-first approach is well-documented. When The Guardian switched to a responsive design, it saw a 44% increase in mobile users, demonstrating the power of a unified experience. Similarly, IKEA embraced responsive design and witnessed its mobile sales grow by 45%. These examples highlight that prioritising the mobile experience directly correlates with user engagement and commercial success.

Actionable Tips for Mobile Compliance

To ensure your website excels under mobile-first indexing, follow these best practices:

- Implement Responsive Design: Use a responsive framework that adapts your layout to any screen size, providing a consistent experience without needing separate mobile URLs (m-dot sites).

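- Verify with Google's Tools: Google has retired its standalone Mobile-Friendly Test and the "Mobile Usability" report in Search Console, so use Lighthouse (in Chrome DevTools or via PageSpeed Insights) for spot-checks, and test your key page templates on real devices to catch site-wide issues.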

- Ensure Content Parity: Confirm that all primary content, including text, images, videos, and links, is identical on both mobile and desktop versions. Avoid content that only loads after a user interaction such as a tap or swipe, because crawlers will not trigger that interaction and will never see it.

- Optimise Tap Targets: Design buttons and links with a minimum size of 48×48 pixels to make them easily tappable on smaller screens, preventing user frustration.

- Maintain Structured Data: Ensure the same structured data markup is present on your mobile site as on your desktop site to provide rich context to search engines.

- Avoid Intrusive Interstitials: Remove large pop-ups that cover main content on mobile, as they violate Google's guidelines and create a poor user experience.
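
If you still serve separate mobile and desktop HTML, the content parity tip can be spot-checked by comparing what each version contains. The sketch below compares only links, using Python's standard HTML parser; `links_missing_on_mobile` and the sample markup are hypothetical, and the same idea extends to headings or structured data.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.add(href)


def links_in(html):
    """Return the set of link targets found in an HTML string."""
    collector = LinkCollector()
    collector.feed(html)
    return collector.links


def links_missing_on_mobile(desktop_html, mobile_html):
    """Return links present on desktop but absent from the mobile version."""
    return sorted(links_in(desktop_html) - links_in(mobile_html))


if __name__ == "__main__":
    desktop = '<a href="/about">About</a><a href="/pricing">Pricing</a>'
    mobile = '<a href="/about">About</a>'
    print(links_missing_on_mobile(desktop, mobile))
```

A fully responsive site serves the same HTML to every device, which is why it sidesteps this class of problem.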

5. HTTPS and SSL Certificate Implementation

HTTPS (Hypertext Transfer Protocol Secure) is the secure protocol through which data is sent between a user's browser and your website. It uses an SSL/TLS certificate to encrypt this data, protecting sensitive information and building user trust. Since Google confirmed HTTPS as a ranking signal and browsers like Chrome now flag HTTP sites as 'Not Secure', verifying its correct implementation is a non-negotiable part of any technical SEO audit checklist.

A thorough audit involves more than just having an SSL certificate. It requires ensuring every page and resource loads securely over HTTPS, all non-secure versions redirect correctly, and there are no mixed content issues. These problems can create security warnings for users and dilute SEO value by creating duplicate content issues or redirect chains. To secure your site and build trust, understanding why your website needs an SSL certificate is paramount. You can learn more about the importance of SSL and its benefits.

Real-World Examples

Many major websites have demonstrated the benefits of a secure migration. In 2015, Wikipedia moved entirely to HTTPS to protect user privacy and enhance trust, a massive undertaking for such a vast site. Similarly, when Brian Dean's Backlinko site migrated, he noted an improvement in keyword rankings, reinforcing the SEO benefit. Etsy also highlighted their migration to HTTPS as one of their most crucial infrastructure projects, prioritising user security for their e-commerce platform.

Actionable Tips for HTTPS and SSL Optimisation

To ensure your site is fully secure and optimised for search engines, follow these best practices:

- Use Permanent Redirects: Implement site-wide 301 redirects to direct all HTTP traffic and link equity to the corresponding HTTPS version of each page.

- Fix Mixed Content Issues: Scan your site for any resources like images, scripts, or CSS files loading over HTTP on an HTTPS page and update their URLs to be secure.

- Update All Internal References: Change all internal links, canonical tags, hreflang tags, and structured data to reference the HTTPS URLs directly. This prevents unnecessary redirect hops.

- Configure Google Search Console: Add the HTTPS version of your site as a new property in Google Search Console and submit your updated, HTTPS-based XML sitemap.

- Implement HSTS: Once you are confident your HTTPS setup is flawless, implement the HSTS (HTTP Strict Transport Security) header to instruct browsers to only request your site over a secure connection.
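
Mixed content is straightforward to detect in a page's HTML. The following sketch flags sub-resources that are still requested over `http://`; the tag list is deliberately short and the names (`RESOURCE_ATTRS`, `find_mixed_content`) are assumptions, so treat it as a starting point alongside a full crawler rather than a replacement for one.

```python
from html.parser import HTMLParser

# Tags whose URL attribute loads a sub-resource (illustrative, not exhaustive).
RESOURCE_ATTRS = {"img": "src", "script": "src", "iframe": "src", "source": "src"}


class MixedContentFinder(HTMLParser):
    """Record (tag, url) pairs for resources requested over plain HTTP."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "link":
            # Only stylesheet links are treated as page resources here.
            rel = (attributes.get("rel") or "").lower().split()
            url = attributes.get("href") if "stylesheet" in rel else None
        else:
            url = attributes.get(RESOURCE_ATTRS.get(tag, ""))
        if url and url.lower().startswith("http://"):
            self.insecure.append((tag, url))


def find_mixed_content(html):
    """Return every insecure sub-resource referenced in an HTML string."""
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure


if __name__ == "__main__":
    page = (
        '<link rel="stylesheet" href="http://cdn.example.co.uk/site.css">'
        '<img src="https://www.example.co.uk/hero.png">'
        '<script src="http://cdn.example.co.uk/app.js"></script>'
    )
    for tag, url in find_mixed_content(page):
        print(f"insecure <{tag}>: {url}")
```

Ordinary `<a>` links to HTTP pages are ignored on purpose: they are not mixed content, although internal ones should still be updated to HTTPS to avoid redirect hops.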

6. Structured Data and Schema Markup Implementation

Structured data is a standardised code format, primarily using Schema.org vocabulary, that you add to your website's HTML. It acts as a direct line of communication with search engines, helping them to precisely understand your page's content. This enhanced understanding enables search engines to display your content as "rich results," such as review stars, recipe cards, event listings, and other enhanced search features. Verifying that the correct schema is implemented without errors is a vital part of any technical SEO audit checklist.

Correctly implemented structured data can significantly improve your click-through rates by making your search listings more visually appealing and informative. Your audit must ensure that the schema type is appropriate for the content, matches what is visible on the page, and is free from validation errors according to Google's guidelines.

Real-World Examples

The power of schema is visible across the web. Recipe sites like AllRecipes use Recipe schema to show cooking times and user ratings directly in search results. E-commerce leaders such as Best Buy leverage Product schema to display prices, stock availability, and reviews, enticing potential buyers. Similarly, event platforms like Eventbrite use Event schema to highlight dates and locations, while local businesses can greatly benefit from LocalBusiness schema to enhance their visibility in local search and Google Maps. For more insights, you can learn more about local schema tactics for small businesses.

Actionable Tips for Schema Implementation

To ensure your structured data effectively boosts your SEO, follow these best practices:

- Use JSON-LD: Implement schema using the JSON-LD format within the `<head>` section of your HTML. It's Google's recommended format and is the easiest to manage.

- Start with Foundational Schema: Implement `Organization` and `WebSite` schema on your homepage to establish your brand's core identity with search engines.

- Be Content-Specific: Use specific schema types like `Article` for blog posts, `Product` for e-commerce pages, `FAQPage` for question-and-answer sections, and `BreadcrumbList` to improve site navigation display.

- Validate and Test: Always use Google’s Rich Results Test and the Schema Markup Validator to check your code for errors before and after deployment.

- Monitor Performance: Regularly check the "Enhancements" reports in Google Search Console to monitor the performance of your marked-up pages and identify any issues flagged by Google.

- Ensure Content Parity: The content within your schema markup must match the content visible to users on the page. Marking up hidden content violates Google's guidelines.
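
To make the JSON-LD tip concrete, here is a minimal sketch that builds an `Organization` snippet ready to paste into a page's `<head>`. The company name, URLs, and the `organization_jsonld` helper are placeholders; generating the markup from the same data that renders the page is one way to keep the two in sync.

```python
import json


def organization_jsonld(name, url, logo_url):
    """Return a <script> element containing Organization schema as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo_url,
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    # Placeholder values for a hypothetical business.
    print(
        organization_jsonld(
            "Example Ltd",
            "https://www.example.co.uk/",
            "https://www.example.co.uk/logo.png",
        )
    )
```

Run the output through the Rich Results Test before deploying it, since valid JSON is not necessarily valid schema.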

7. Canonical Tag Implementation and Duplicate Content Management

Canonical tags (rel="canonical") are crucial HTML elements that tell search engines the preferred or "master" version of a webpage when multiple URLs contain similar or identical content. They consolidate indexing signals and link equity to a single URL, preventing duplicate content issues that can dilute your rankings and confuse crawlers. A core part of any technical SEO audit checklist is identifying duplicate content scenarios and verifying that canonical tags are implemented correctly.

Without proper canonicalisation, search engines may index multiple versions of the same page, splitting your SEO value across different URLs. This often occurs with e-commerce filters, tracking parameters, and content syndication. Correctly implemented canonicals ensure that all ranking power is directed to your chosen page, strengthening its authority.

Real-World Examples

E-commerce giants like Amazon effectively use canonical tags to manage product variations. A URL for a specific colour or size of a t-shirt will have a canonical tag pointing back to the main product page, consolidating all value there. Similarly, Zappos canonicalises filtered and sorted category URLs (e.g., ?sort=price_ascending) back to the primary category page. Content sites like Medium utilise cross-domain canonicals when an article is republished from another source, correctly attributing authority to the original author.

Actionable Tips for Canonical Tag Implementation

To effectively manage duplicate content and consolidate authority, follow these best practices:

- Use Absolute URLs: Always specify the full, absolute URL in your canonical tags (e.g., `https://www.example.co.uk/page`) rather than relative paths (`/page`) to avoid misinterpretation.

- Implement Self-Referencing Canonicals: Every indexable page should have a canonical tag that points to itself. This is a clear signal to search engines that the page is the master version.

- Ensure Canonical URL is Indexable: The URL specified in the canonical tag must return a 200 (OK) status code and must not be blocked by robots.txt or have a "noindex" tag.

- Avoid Chaining Canonicals: Do not point a canonical from Page A to Page B, which then has a canonical to Page C. Always point directly to the final, definitive URL (A → C). For more on managing these signals, you can learn more about boosting your online presence on amaxmarketing.co.uk.

- Check for Conflicting Signals: Ensure your canonical tags do not conflict with other signals like hreflang, sitemap entries, or pagination markup (rel=prev/next, which Google no longer uses as an indexing signal, although other search engines may). Consistency is key for search engine understanding.
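
Several of these checks can be automated per page. The sketch below covers three of them (a tag exists, there is only one, and it uses an absolute URL) and reports when the canonical points at a different URL so you can confirm that is intentional. `audit_canonical` and the example URLs are hypothetical, and checking that the target returns a 200 status would need an HTTP request, which is left out here.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel:
            self.canonicals.append(attributes.get("href") or "")


def audit_canonical(page_url, html):
    """Return a list of findings for one page; an empty list means no issues."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonicals:
        return ["no canonical tag found"]
    findings = []
    if len(finder.canonicals) > 1:
        findings.append("multiple canonical tags found")
    href = finder.canonicals[0]
    parsed = urlparse(href)
    if not (parsed.scheme and parsed.netloc):
        findings.append(f"canonical is not an absolute URL: {href}")
    elif href != page_url:
        # Not necessarily wrong, but worth confirming it is deliberate.
        findings.append(f"canonical points to a different URL: {href}")
    return findings


if __name__ == "__main__":
    html = '<head><link rel="canonical" href="/page"></head>'
    print(audit_canonical("https://www.example.co.uk/page", html))
```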

8. Crawl Error Detection and Broken Link Remediation

Crawl errors occur when search engine bots try to access pages on your website but fail due to issues like 404 "Not Found" errors, server problems, or redirect chains. Broken links, both internal and external, degrade user experience and waste valuable crawl budget, signalling poor site maintenance to search engines. A fundamental part of any technical SEO audit checklist is identifying, prioritising, and resolving these issues to ensure a smooth path for crawlers and users alike.

Effectively managing crawl errors involves using tools like Google Search Console and dedicated crawlers to find problematic URLs. Once identified, the goal is to implement the correct fix, whether that's a 301 redirect for moved content, a 410 "Gone" status for permanently deleted pages, or correcting a simple typo in an internal link. This process protects your link equity, improves site usability, and helps search engines index your valuable content more efficiently.

Real-World Examples

Many large e-commerce sites proactively manage this by automatically redirecting discontinued product pages to the relevant category page, preventing users from hitting a dead end. Similarly, news publishers frequently use a 410 status code for outdated articles they remove permanently, clearly telling Google not to crawl that URL again. When Moz systematically fixed 404 errors pointing to high-value blog posts, they recovered significant organic traffic by redirecting the broken links to relevant, live content.

Actionable Tips for Crawl Error Remediation

To maintain a healthy, crawlable website, integrate these practices into your routine:

- Monitor Coverage Reports: Regularly check the "Pages" report (formerly Coverage) in Google Search Console to catch 4xx and 5xx errors as they appear.

- Prioritise High-Impact Fixes: Use a crawler like Screaming Frog or Sitebulb to find broken links and prioritise fixing those on pages that receive high traffic or have valuable external backlinks.

- Implement Correct Redirects: Use permanent 301 redirects for content that has moved. For content that is permanently deleted and has no suitable replacement, serve a 410 status code.

- Fix Redirect Chains: Eliminate unnecessarily long redirect chains (e.g., A → B → C). Update the initial link to point directly to the final destination (A → C) to speed up crawling and preserve link equity.

- Create a Helpful Custom 404 Page: Design a custom 404 page that helps users find what they are looking for by including a search bar, links to popular pages, and clear navigation.
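
The redirect-chain fix lends itself to a small script. Given a redirect map exported from your server configuration or a crawl (represented here as a plain dictionary, which is an assumption about your data), the sketch below finds the final destination for each URL so every rule can point straight at it. The function names are illustrative.

```python
def resolve_redirect(url, redirects):
    """Follow `redirects` (old URL -> new URL) and return (final_url, hops)."""
    seen = [url]
    while url in redirects:
        url = redirects[url]
        if url in seen:
            raise ValueError("redirect loop: " + " -> ".join(seen + [url]))
        seen.append(url)
    return url, len(seen) - 1


def flatten_redirects(redirects):
    """Rewrite every rule so it points directly at its final destination."""
    return {source: resolve_redirect(source, redirects)[0] for source in redirects}


if __name__ == "__main__":
    # Hypothetical chain: /a -> /b -> /c
    rules = {"/a": "/b", "/b": "/c"}
    print(resolve_redirect("/a", rules))
    print(flatten_redirects(rules))
```

The same lookup tells you which internal links to update: any link whose target appears as a key in the map should be changed to the resolved URL.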

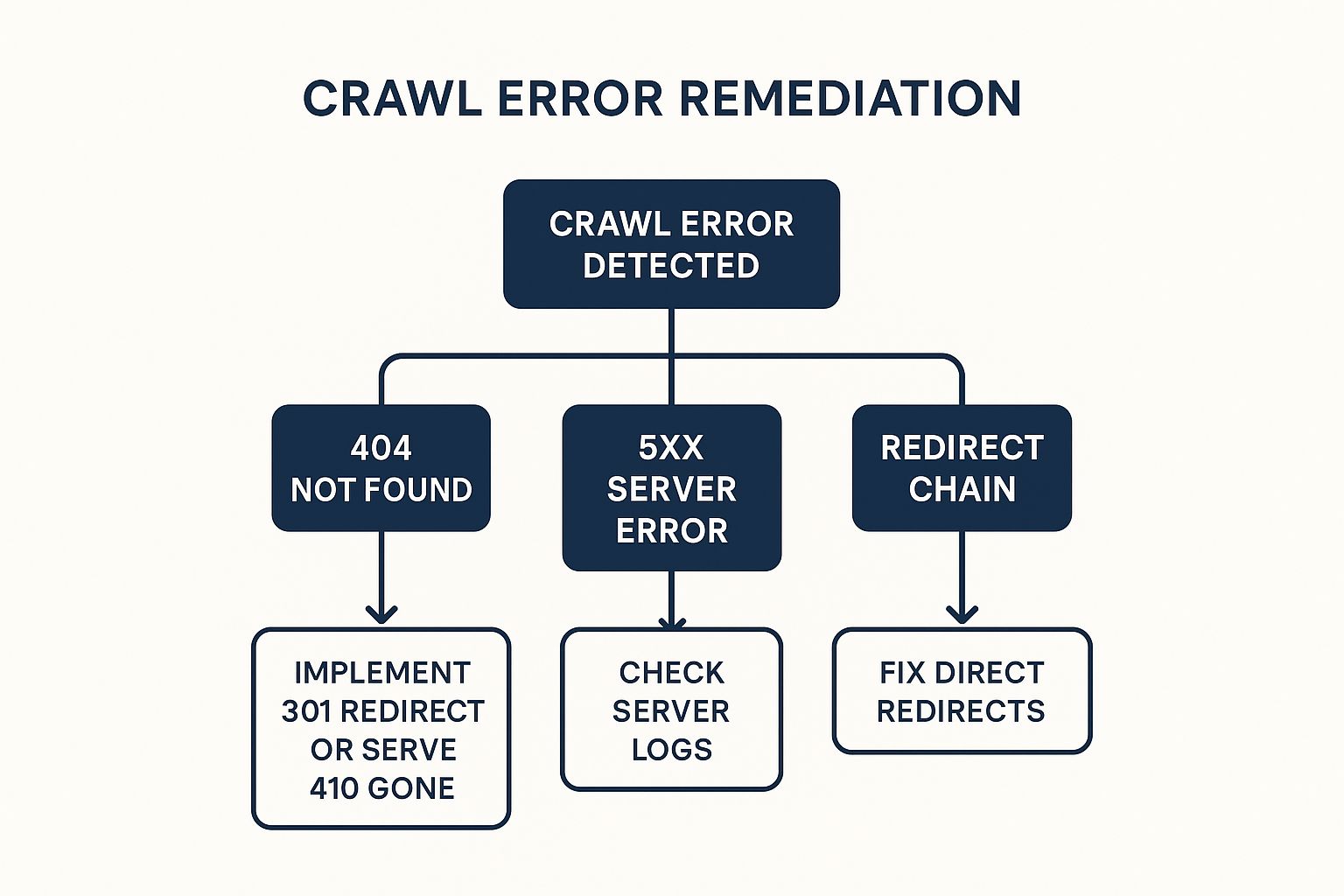

9. Crawl Error Resolution

Crawl errors occur when a search engine bot tries to access a page on your website but fails. These errors can prevent your pages from being indexed and negatively impact user experience, making their resolution a critical part of any technical SEO audit checklist. Monitoring and fixing these issues ensures that search engines can access your content efficiently and that visitors don't land on broken or inaccessible pages.

Systematically addressing crawl errors helps preserve your crawl budget. When search engine bots encounter fewer errors, they can spend more time discovering and indexing your valuable content rather than wasting resources on dead ends. A clean crawl report signals a well-maintained, high-quality website to search engines.

The following decision tree provides a clear workflow for diagnosing and resolving the most common types of crawl errors you might encounter.

This visual guide simplifies troubleshooting by outlining a step-by-step process, from identifying the error type to implementing the correct fix.

Real-World Examples

A large e-commerce site might discover hundreds of 404 errors for discontinued product pages after a site migration. Instead of leaving these as broken links, they would implement 301 redirects to the most relevant new product category pages, preserving link equity and guiding users correctly. Similarly, a news publisher encountering intermittent 5xx server errors might investigate their server logs to find that a faulty plugin is causing timeouts during peak traffic, allowing them to disable it and restore site stability.

Actionable Tips for Resolving Crawl Errors

To effectively manage and fix crawl errors, adopt a proactive approach:

- Regularly Monitor Google Search Console: The "Pages" report (formerly Coverage report) is your primary source for identifying crawl errors. Check this report weekly to catch problems early.

- Prioritise High-Impact Errors: Focus first on fixing errors affecting your most important pages, such as key service pages, top-selling products, or high-traffic blog posts.

- Investigate 404s: For "Not Found" errors, determine if the page was intentionally removed or if it's a broken internal link. If the content has moved, implement a 301 redirect. If it's permanently gone, consider a 410 "Gone" status code.

- Troubleshoot Server Errors (5xx): These errors indicate a problem with your server. Check your server logs, consult with your hosting provider, and review recent site changes or plugin updates that might be causing instability.

- Update Internal Links: After fixing an error by redirecting a URL, always update the internal links pointing to the old URL. This eliminates unnecessary redirect chains and improves crawl efficiency.
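
The triage logic in these tips can be written down as a simple function, which is handy when you are working through a long export of errors. This is a sketch of the workflow described above, not an official rule set: `recommend_fix` and its parameters are hypothetical, and the recommendations simply restate the tips.

```python
def recommend_fix(status, moved_to=None, intentionally_removed=False):
    """Suggest a next step for a crawled URL based on its HTTP status code."""
    if 200 <= status < 300:
        return "no action needed"
    if 300 <= status < 400:
        return "update internal links to point at the final URL"
    if status in (404, 410):
        if moved_to:
            return f"add a 301 redirect to {moved_to} and update internal links"
        if intentionally_removed:
            return "serve a 410 Gone status"
        return "fix the broken internal link or restore the page"
    if 500 <= status < 600:
        return "check server logs, hosting, and recent site or plugin changes"
    return "investigate manually"


if __name__ == "__main__":
    # Illustrative rows from a crawl export: (url, status, new location).
    crawl = [
        ("/old-product", 404, "/category/shoes"),
        ("/retired-offer", 404, None),
        ("/checkout", 503, None),
    ]
    for url, status, moved_to in crawl:
        print(url, status, "->", recommend_fix(status, moved_to=moved_to))
```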

Technical SEO Audit Checklist Comparison

| Item | Implementation Complexity | Resource Requirements | Expected Outcomes | Ideal Use Cases | Key Advantages |
|---|---|---|---|---|---|
| XML Sitemap Optimisation and Submission | Moderate | Low to moderate (automation helps) | Improved crawl efficiency and faster indexing | Large sites, complex architectures | Enhances crawl guidance and indexing speed |
| Robots.txt Configuration and Crawl Budget Management | Low | Low | Controlled crawler access and optimised crawl budget | All sites needing crawl control | Reduces server load and protects sensitive areas |
| Page Speed and Core Web Vitals Optimisation | High | High (development and testing) | Better rankings, improved user experience | Sites prioritising UX and rankings | Direct ranking factor and measurable user benefits |
| Mobile-Friendliness and Mobile-First Indexing Compliance | Moderate to high | Moderate to high | Improved mobile ranking and user experience | Sites with significant mobile traffic | Essential for mobile-first indexing and UX |
| HTTPS and SSL Certificate Implementation | Moderate | Low to moderate | Secure connections and SEO ranking boost | All sites, especially e-commerce | Builds trust, secures data, and improves rankings |
| Structured Data and Schema Markup Implementation | Moderate to high | Moderate | Enhanced rich search results and higher CTR | Content-rich and e-commerce sites | Enables rich snippets and improved SERP features |
| Canonical Tag Implementation and Duplicate Content Management | Low to moderate | Low to moderate | Reduced duplicate content issues, consolidated ranking | Sites with duplicate or parameterised URLs | Consolidates link equity and manages duplicates |
| Crawl Error Detection and Broken Link Remediation | Moderate | Moderate | Improved crawl efficiency and user experience | All sites, especially large or ageing ones | Preserves link equity and improves site health |

Turning Your Audit into Actionable SEO Wins

You have now journeyed through the intricate, yet essential, components of a comprehensive technical SEO audit checklist. From optimising XML sitemaps to managing crawl budgets with a precise robots.txt file, and from enhancing Core Web Vitals to securing your site with HTTPS, each item on this list represents a critical lever you can pull to improve your website's performance in search engine results. Completing the audit is a monumental first step, but the true transformation begins now, in the implementation phase.

The insights you have gathered are not merely diagnostic data; they are the blueprint for building a faster, more secure, and more search-engine-friendly website. The real value of this technical SEO audit checklist is realised when you translate your findings into a prioritised action plan. Think of your website as a physical structure. You have just completed a thorough inspection, identifying everything from minor cosmetic issues to potential foundational weaknesses. Now it is time to call in the builders.

Prioritising Your Technical SEO Fixes

The sheer volume of tasks can feel overwhelming, but a strategic approach makes it manageable. Not all fixes carry the same weight. Your priority should be to address the issues that have the most significant impact on user experience and search engine crawling.

Start by focusing on what we can call the "Critical Impact Quadrant":

- Resolve Major Crawl Errors: Begin with any 4xx and 5xx errors you uncovered. A user or search bot hitting a broken page is a dead end, directly harming user experience and wasting your crawl budget. Fixing broken links and server errors is a high-priority, foundational task.

- Enhance Core Web Vitals: Page speed is no longer a suggestion; it is a core ranking factor. Poor LCP, INP, and CLS scores can deter visitors and suppress your rankings. Addressing slow-loading resources, optimising images, and improving server response times offers a direct and measurable benefit.

- Ensure Full HTTPS Security: If your site isn't fully secure, or has mixed content issues, this is a non-negotiable fix. Security is a baseline expectation for both users and search engines like Google.

- Correct Indexability Issues: Make sure your most important pages are not blocked by `noindex` tags or your `robots.txt` file. Conversely, ensure low-value pages (like admin logins or thank-you pages) are correctly excluded. Proper indexability control is fundamental to showing up in search results.

Once these critical items are addressed, you can move onto the equally important, but often less urgent, tasks. This includes refining your structured data implementation, resolving canonical tag conflicts to consolidate link equity, and ensuring your mobile experience is flawless for mobile-first indexing.

From One-Time Audit to Ongoing Health

Mastering the elements of this technical SEO audit checklist is not about a one-time project. It is about cultivating a new mindset. Technical SEO is an ongoing discipline of monitoring, refinement, and adaptation. Search engine algorithms are constantly evolving, user expectations are always rising, and your own website will change as you add new content and features.

Key Takeaway: A technical SEO audit is not a finish line; it is the starting line for a continuous improvement cycle. Schedule regular check-ins, perhaps quarterly, to revisit this checklist and ensure your website's technical foundation remains solid and resilient.

By consistently addressing the technical details, you are not just ticking boxes. You are building a powerful, reliable foundation that amplifies every other marketing effort you undertake. Great content cannot rank if it cannot be crawled. A fantastic product cannot sell if the page is too slow to load. A strong technical base ensures that your investment in content, design, and user experience delivers its maximum possible return, leading to sustainable organic growth and a dominant online presence.

Feeling overwhelmed by the scope of your audit? Transforming a detailed checklist into a strategic roadmap requires expertise. The team at Amax Marketing specialises in conducting in-depth technical SEO audits and creating prioritised, actionable plans that drive real growth. Let us help you turn your audit findings into tangible ranking improvements with a complimentary marketing audit today.